Feature detectors are among the most interesting algorithms built into OpenCV. In this tutorial I will cover only the SURF algorithm. Be aware that SURF and SIFT are not free: they are patented and should be used for educational purposes only, not commercial ones. Hopefully you will be able to adapt the code to other (free) feature detectors and descriptors as well, such as ORB, FAST, MSER, STAR, BRIEF, etc.

Update (02.07.2013): I have finally added working source code, precompiled for viewing. Hopefully it will clear up some misunderstandings about how to use the code. Apparently this simple article has become fairly popular over the past year.

Download source code: SURF_test.zip (27.96 MB)

(It might not work if the OpenCV library isn't properly linked in your system path.)

I know this website is not very popular, and I guess you have already checked the OpenCV documentation on feature detection.

My code is largely based on this sample code from the OpenCV documentation: http://opencv.itseez.com/doc/tutorials/features2d/feature_homography/feature_homography.html#feature-homography

I'm only going to cover the SURF part. I assume you know how to read an image into an OpenCV Mat; if you don't, check this out:

http://opencv.itseez.com/doc/tutorials/introduction/display_image/display_image.html#display-image

The first thing to do is read two images from file: the "scene" image and the "object" image.

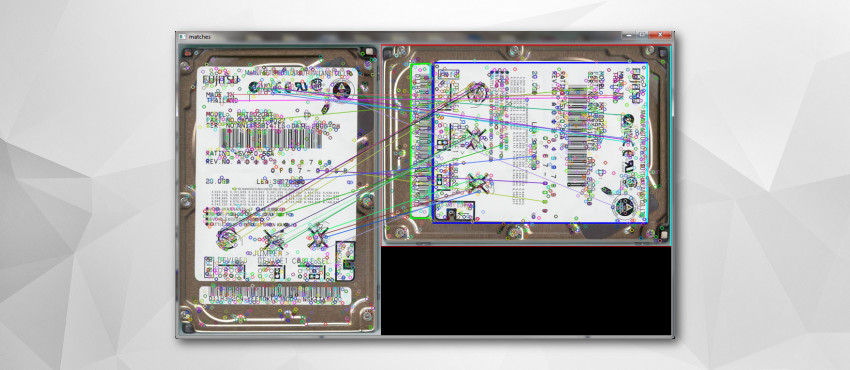

A feature algorithm then finds keypoints in both images and calculates a descriptor for each keypoint. The descriptors are what we compare against each other to determine whether the object was found in the scene.
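Here is a concrete picture of what "comparing descriptors" means. A SURF descriptor is a fixed-length vector of floats (64 values by default, 128 in extended mode). Two descriptors are typically compared by their Euclidean (L2) distance, and a smaller distance means the two keypoints look more alike. The standalone sketch below illustrates that idea without OpenCV. Descriptor and l2Distance are hypothetical names chosen for illustration, not OpenCV API.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical stand-in for one row of a descriptor Mat (not an OpenCV type).
using Descriptor = std::vector<float>;

// Euclidean (L2) distance between two descriptors of equal length.
// Smaller values mean the two keypoints look more alike.
float l2Distance(const Descriptor& a, const Descriptor& b)
{
    assert(a.size() == b.size());
    float sum = 0.0f;
    for (std::size_t i = 0; i < a.size(); ++i) {
        float d = a[i] - b[i];
        sum += d * d;
    }
    return std::sqrt(sum);
}
```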

I'm not going to try to explain how SURF or any other algorithm works. For that, you will have to consult Wikipedia or another website yourself. Trust me, it's pretty complicated. Luckily for us, everything is built into the OpenCV library.

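First, include the "features2d" headers in your project. The code in this tutorial uses the OpenCV 2.4 API, in which SURF lives in the separate "nonfree" module. The snippets below also assume "using namespace cv;" and "using namespace std;":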

```cpp
#include "opencv2/features2d/features2d.hpp"
#include "opencv2/nonfree/features2d.hpp" // This is where the actual SURF and SIFT algorithms are located
#include "opencv2/calib3d/calib3d.hpp"    // findHomography, used later on
```

First, we want to extract keypoints and calculate their descriptors. To do that, declare a vector of keypoints and a descriptor matrix for both the object and the scene:

```cpp
// Vectors of keypoints
vector< cv::KeyPoint > keypointsO; // keypoints for the object
vector< cv::KeyPoint > keypointsS; // keypoints for the scene

// Descriptor matrices
Mat descriptors_object, descriptors_scene;
```

Now let's declare a SURF detector object, which will extract the keypoints. When declaring it, you have to provide the minimum Hessian threshold. The smaller it is, the more keypoints your program will find, at the cost of performance. In my testing, 1500 was low enough most of the time, but the right value varies from application to application.

```cpp
SurfFeatureDetector surf(1500); // minimum Hessian threshold
surf.detect(sceneMat, keypointsS);
surf.detect(objectMat, keypointsO);
```
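Why does a lower threshold produce more keypoints? As a simplification, SURF keeps only candidate points whose Hessian-determinant response exceeds the threshold. It additionally requires them to be local maxima across space and scale, which this sketch ignores. The toy sketch below is not OpenCV code. It applies just the thresholding step to made-up candidates. Candidate and keepAboveThreshold are hypothetical names.

```cpp
#include <vector>

// Hypothetical candidate point with a detector response value.
struct Candidate {
    float x, y;
    float response;
};

// Keep only candidates whose response exceeds the threshold.
// This models only the thresholding step; real SURF also performs
// non-maximum suppression across space and scale.
std::vector<Candidate> keepAboveThreshold(const std::vector<Candidate>& candidates,
                                          float hessianThreshold)
{
    std::vector<Candidate> kept;
    for (const Candidate& c : candidates)
        if (c.response > hessianThreshold)
            kept.push_back(c);
    return kept;
}
```

Take candidate responses of 500, 1200, 2000 and 3000. A threshold of 1500 keeps two of them, while a threshold of 400 keeps all four.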

The next step is to calculate the descriptors:

```cpp
SurfDescriptorExtractor extractor;
extractor.compute( sceneMat, keypointsS, descriptors_scene );
extractor.compute( objectMat, keypointsO, descriptors_object );
```

Once we have the descriptors for the object and the scene, we can do the actual comparison, or should I say object detection. First you have to choose a matcher. I'm going to use FlannBasedMatcher, because in my experiments it was the fastest. You can use the brute-force matcher (BFMatcher) as well, though. Here is how to declare each of them:

```cpp
// Option 1: FLANN-based matcher
FlannBasedMatcher matcher;

// Option 2: brute-force matcher. For SURF, the norm can be either NORM_L1 or NORM_L2.
// For binary descriptors such as ORB, use NORM_HAMMING instead of NORM_L*.
// BFMatcher matcher(NORM_L1);
```

Keep only one of the two: comment out or delete the matcher you are not using. Now let's perform the actual comparison. We will use k-nearest-neighbor matching, which is built into the OpenCV library. For each object descriptor, knnMatch finds the 2 closest scene descriptors. If you want to understand this further, Google is your friend.

```cpp
vector< vector< DMatch > > matches;
matcher.knnMatch( descriptors_object, descriptors_scene, matches, 2 ); // find the 2 nearest neighbors
```
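Conceptually, brute-force 2-nearest-neighbor matching compares every object descriptor with every scene descriptor and keeps the two closest. FLANN (Fast Library for Approximate Nearest Neighbors) gets a similar, approximate answer faster by using search trees. The sketch below is a plain C++ illustration of the brute-force idea, not OpenCV's implementation. Match is a hypothetical stand-in for cv::DMatch with the same queryIdx/trainIdx/distance fields, and the descriptors are plain float vectors.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical mirror of cv::DMatch: index into the query (object) set,
// index into the train (scene) set, and the distance between them.
struct Match {
    int queryIdx;
    int trainIdx;
    float distance;
};

using Descriptor = std::vector<float>;

// Euclidean distance between two equal-length descriptors.
static float l2(const Descriptor& a, const Descriptor& b)
{
    assert(a.size() == b.size());
    float sum = 0.0f;
    for (std::size_t i = 0; i < a.size(); ++i) {
        float d = a[i] - b[i];
        sum += d * d;
    }
    return std::sqrt(sum);
}

// Brute-force k-nearest-neighbor matching: for every query descriptor,
// return up to k closest train descriptors, sorted by increasing distance.
std::vector<std::vector<Match>> knnMatchBruteForce(const std::vector<Descriptor>& query,
                                                   const std::vector<Descriptor>& train,
                                                   std::size_t k)
{
    std::vector<std::vector<Match>> result(query.size());
    for (std::size_t q = 0; q < query.size(); ++q) {
        std::vector<Match> all;
        for (std::size_t t = 0; t < train.size(); ++t)
            all.push_back({static_cast<int>(q), static_cast<int>(t), l2(query[q], train[t])});
        std::sort(all.begin(), all.end(),
                  [](const Match& a, const Match& b) { return a.distance < b.distance; });
        if (all.size() > k)
            all.resize(k); // keep only the k best
        result[q] = all;
    }
    return result;
}
```

If the scene has fewer than k descriptors, a query gets fewer than k neighbors. That is why the filtering loop in the next step skips entries with fewer than 2 matches.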

After matching, we have to discard unreliable results and keep only the good matches. To do that, we will use the nearest neighbor distance ratio (NNDR) test. The best match is kept only if its distance is at most nndrRatio times the distance of the second-best match. It is pretty straightforward; just check the code:

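```cpp
float nndrRatio = 0.7f; // same value as in the full code below; lower = stricter
vector< DMatch > good_matches;
good_matches.reserve(matches.size());

for (size_t i = 0; i < matches.size(); ++i)
{
    if (matches[i].size() < 2)
        continue; // no second neighbor to compare against

    const DMatch &m1 = matches[i][0]; // best match
    const DMatch &m2 = matches[i][1]; // second-best match

    if (m1.distance <= nndrRatio * m2.distance)
        good_matches.push_back(m1);
}
```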
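Here is the same filtering written as a standalone function without OpenCV types. Match is a hypothetical stand-in for cv::DMatch. The function shows what the ratio does: with nndrRatio = 0.7, a match survives only if its distance is at most 70% of the runner-up's distance. Lowering the ratio keeps fewer but more reliable matches.

```cpp
#include <cstddef>
#include <vector>

// Hypothetical mirror of cv::DMatch (not the OpenCV type).
struct Match {
    int queryIdx;
    int trainIdx;
    float distance;
};

// Nearest neighbor distance ratio (NNDR) test: keep the best match only
// if it is clearly better than the second-best one.
std::vector<Match> ratioTest(const std::vector<std::vector<Match>>& knnMatches,
                             float nndrRatio)
{
    std::vector<Match> good;
    good.reserve(knnMatches.size());
    for (const std::vector<Match>& neighbors : knnMatches) {
        if (neighbors.size() < 2)
            continue; // no second neighbor to compare against
        if (neighbors[0].distance <= nndrRatio * neighbors[1].distance)
            good.push_back(neighbors[0]);
    }
    return good;
}
```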

By now we have all the good matches stored in memory. One thing I have learned experimentally is that when you have 7 or more good matches, you can assume the object has been found and do whatever you want with it. In my example, we will draw a boundary around the detected object. Again, this threshold may be different for your application, so test it on your own data. In any case, findHomography needs at least 4 point pairs.

Now let's extract the coordinates of the good matches from the object and the scene so we can find the homography. We will use it to locate the boundary of the object in the scene. The rest of the tutorial is more or less copied from the OpenCV documentation:

```cpp
std::vector< Point2f > obj;
std::vector< Point2f > scene;

for( size_t i = 0; i < good_matches.size(); i++ )
{
    //-- Get the keypoints from the good matches
    obj.push_back( keypointsO[ good_matches[i].queryIdx ].pt );
    scene.push_back( keypointsS[ good_matches[i].trainIdx ].pt );
}

Mat H = findHomography( obj, scene, CV_RANSAC );

//-- Get the corners from the image_1 ( the object to be "detected" )
std::vector< Point2f > obj_corners(4);
obj_corners[0] = Point2f( 0, 0 );
obj_corners[1] = Point2f( (float)objectMat.cols, 0 );
obj_corners[2] = Point2f( (float)objectMat.cols, (float)objectMat.rows );
obj_corners[3] = Point2f( 0, (float)objectMat.rows );
std::vector< Point2f > scene_corners(4);

perspectiveTransform( obj_corners, scene_corners, H );

//-- Draw lines between the corners (the mapped object in the scene - image_2 )
Scalar color( 0, 255, 0 ); // line color (green in BGR); any color works
line( outImg, scene_corners[0], scene_corners[1], color, 2 ); //TOP line
line( outImg, scene_corners[1], scene_corners[2], color, 2 );
line( outImg, scene_corners[2], scene_corners[3], color, 2 );
line( outImg, scene_corners[3], scene_corners[0], color, 2 );
```
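perspectiveTransform maps each point (x, y) through the 3x3 homography H using homogeneous coordinates. It computes (x', y', w') = H * (x, y, 1) and returns (x'/w', y'/w'). The standalone sketch below reproduces that arithmetic for the four object corners without OpenCV. Pt, Homography, transformPoint and mapObjectCorners are hypothetical names, and H is a plain row-major array here rather than a cv::Mat.

```cpp
#include <array>
#include <cassert>
#include <vector>

struct Pt { double x, y; };

// Row-major 3x3 homography (hypothetical plain-array stand-in for cv::Mat H).
using Homography = std::array<std::array<double, 3>, 3>;

// Map one point through H in homogeneous coordinates, then divide by w.
Pt transformPoint(const Homography& H, Pt p)
{
    double x = H[0][0] * p.x + H[0][1] * p.y + H[0][2];
    double y = H[1][0] * p.x + H[1][1] * p.y + H[1][2];
    double w = H[2][0] * p.x + H[2][1] * p.y + H[2][2];
    assert(w != 0.0); // point would map to infinity
    return {x / w, y / w};
}

// Map the corners of a cols x rows object image, in the same order as
// obj_corners: top-left, top-right, bottom-right, bottom-left.
std::vector<Pt> mapObjectCorners(const Homography& H, int cols, int rows)
{
    std::vector<Pt> corners = {{0.0, 0.0}, {double(cols), 0.0},
                               {double(cols), double(rows)}, {0.0, double(rows)}};
    std::vector<Pt> mapped;
    for (const Pt& c : corners)
        mapped.push_back(transformPoint(H, c));
    return mapped;
}
```

The division by w is what lets a homography turn the object's rectangle into a general quadrilateral in the scene. A pure scale-and-shift matrix keeps w = 1 and only moves and resizes the rectangle.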

And that's it, Sir or Madam: you have yourself code for detecting objects in C++. To see how it all fits together, check the full code below. I hope it was useful to you, because I remember that when I first started learning OpenCV, it was very confusing. I would encourage you to study how all these algorithms actually work. I have only shown how to use them with OpenCV, not how they work, so you will have to learn that elsewhere.

```cpp
#include <iostream>
#include <vector>
#include "opencv2/core/core.hpp"
#include "opencv2/features2d/features2d.hpp"
#include "opencv2/nonfree/features2d.hpp"
#include "opencv2/calib3d/calib3d.hpp"
using namespace cv;
using namespace std;

Mat outImg; // global copy of the scene image that the boundary is drawn on (see the note below)

bool findObjectSURF( Mat objectMat, Mat sceneMat, int hessianValue )
{
    bool objectFound = false;
    float nndrRatio = 0.7f;

    //vector of keypoints
    vector< cv::KeyPoint > keypointsO;
    vector< cv::KeyPoint > keypointsS;

    Mat descriptors_object, descriptors_scene;

    //-- Step 1: Extract keypoints
    SurfFeatureDetector surf(hessianValue);
    surf.detect(sceneMat, keypointsS);
    if(keypointsS.size() < 7) return false; //Not enough keypoints, object not found
    surf.detect(objectMat, keypointsO);
    if(keypointsO.size() < 7) return false; //Not enough keypoints, object not found

    //-- Step 2: Calculate descriptors (feature vectors)
    SurfDescriptorExtractor extractor;
    extractor.compute( sceneMat, keypointsS, descriptors_scene );
    extractor.compute( objectMat, keypointsO, descriptors_object );

    //-- Step 3: Matching descriptor vectors using FLANN matcher
    FlannBasedMatcher matcher;
    std::vector< vector< DMatch > > matches;
    matcher.knnMatch( descriptors_object, descriptors_scene, matches, 2 );

    //-- Step 4: Keep only the good matches (NNDR test)
    vector< DMatch > good_matches;
    good_matches.reserve(matches.size());
    for (size_t i = 0; i < matches.size(); ++i)
    {
        if (matches[i].size() < 2)
            continue;
        const DMatch &m1 = matches[i][0];
        const DMatch &m2 = matches[i][1];
        if(m1.distance <= nndrRatio * m2.distance)
            good_matches.push_back(m1);
    }

    if( good_matches.size() >= 7 )
    {
        cout << "OBJECT FOUND!" << endl;

        std::vector< Point2f > obj;
        std::vector< Point2f > scene;
        for( size_t i = 0; i < good_matches.size(); i++ )
        {
            //-- Get the keypoints from the good matches
            obj.push_back( keypointsO[ good_matches[i].queryIdx ].pt );
            scene.push_back( keypointsS[ good_matches[i].trainIdx ].pt );
        }

        Mat H = findHomography( obj, scene, CV_RANSAC );

        //-- Get the corners from the image_1 ( the object to be "detected" )
        std::vector< Point2f > obj_corners(4);
        obj_corners[0] = Point2f( 0, 0 );
        obj_corners[1] = Point2f( (float)objectMat.cols, 0 );
        obj_corners[2] = Point2f( (float)objectMat.cols, (float)objectMat.rows );
        obj_corners[3] = Point2f( 0, (float)objectMat.rows );
        std::vector< Point2f > scene_corners(4);
        perspectiveTransform( obj_corners, scene_corners, H );

        //-- Draw lines between the corners (the mapped object in the scene - image_2 )
        Scalar color( 0, 255, 0 ); // line color (green in BGR); any color works
        line( outImg, scene_corners[0], scene_corners[1], color, 2 ); //TOP line
        line( outImg, scene_corners[1], scene_corners[2], color, 2 );
        line( outImg, scene_corners[2], scene_corners[3], color, 2 );
        line( outImg, scene_corners[3], scene_corners[0], color, 2 );

        objectFound = true;
    }
    else
    {
        cout << "OBJECT NOT FOUND!" << endl;
    }

    cout << "Matches found: " << matches.size() << endl;
    cout << "Good matches found: " << good_matches.size() << endl;

    return objectFound;
}
```

Note: in the full source code, "outImg" is a global matrix into which I load the original scene image.